Query Parsing

Overview

This example demonstrates how to scan query history from a data warehouse and save it in the Tokern Lineage engine. The app automatically parses and extracts data lineage from the queries.

The example consists of the following sequence of operations:

- Start the docker containers that make up the demo. Refer to the docs for detailed instructions on installing demo-wikimedia.

- Scan the query history and send the queries to the data lineage app.

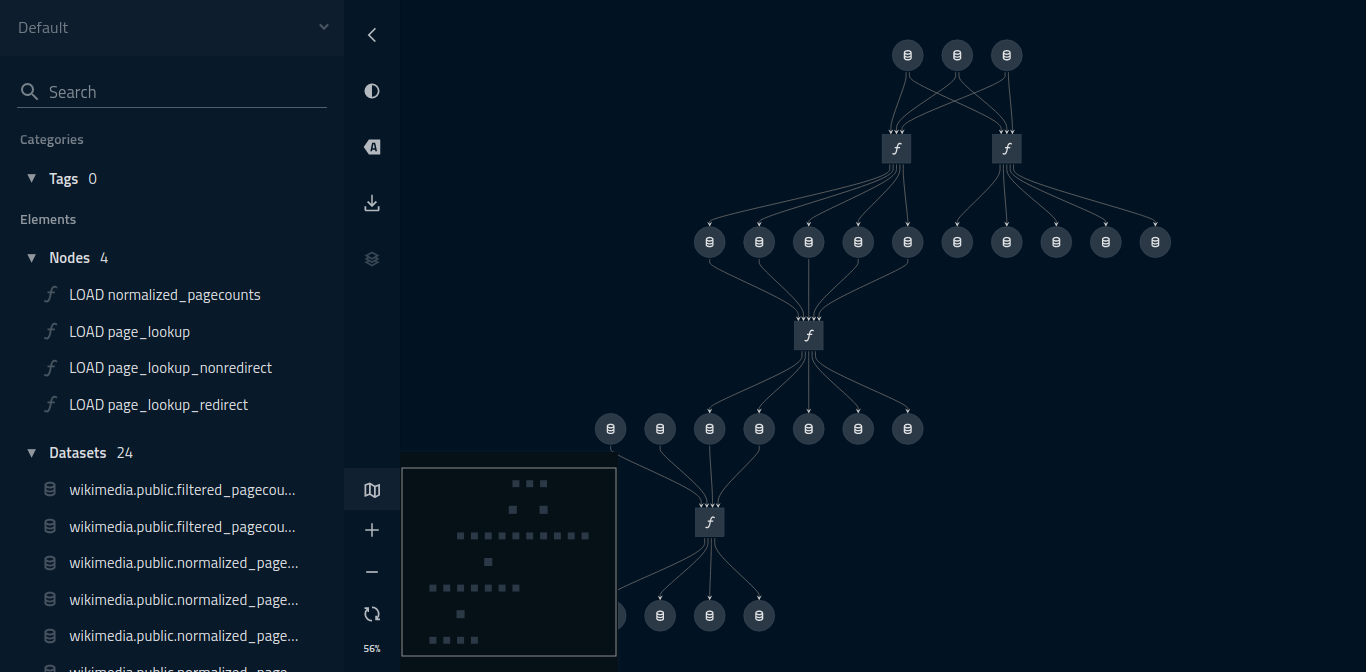

- Visualize the graph by visiting the Tokern UI.

- Analyze the graph

Download queries for the Wikimedia demo from GitHub

Install Wikimedia demo

This example requires the Wikimedia demo to be running. Start the demo using the following instructions:

# in a new directory run

wget https://raw.githubusercontent.com/tokern/data-lineage/master/install-manifests/docker-compose/wikimedia-demo.yml -O docker-compose.yml

# or run

curl https://raw.githubusercontent.com/tokern/data-lineage/master/install-manifests/docker-compose/wikimedia-demo.yml -o docker-compose.yml

Run docker-compose

docker-compose up -d

Verify containers are running

docker container ls | grep tokern
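The containers can take a few seconds to start accepting connections after `docker-compose up -d` returns. The sketch below is one way to wait for the API port before running the rest of the example. It is not part of the Tokern tooling: `wait_for_port` is a hypothetical helper, and the host and port in the usage comment are taken from the `docker_address` used in this example.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: wait and retry.
            time.sleep(interval)
    return False


# Usage with the address from this example (assumes the API listens on port 8000):
# if not wait_for_port("127.0.0.1", 8000):
#     raise SystemExit("API did not become reachable; check `docker container ls`")
```

An open port only shows that a process is listening. It does not prove that the application has finished initializing.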

# Required configuration for API and wikimedia database network address

docker_address = "http://127.0.0.1:8000"

wikimedia_db = {
    "username": "etldev",
    "password": "3tld3v",
    "uri": "tokern-demo-wikimedia",
    "port": "5432",
    "database": "wikimedia"
}
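Hard-coded credentials are fine for the demo. Outside the demo, the same dictionary could be built from environment variables. The following is a minimal sketch of that idea: the `WIKIMEDIA_DB_` prefix and the `load_db_config` helper are hypothetical names chosen for illustration, and the defaults are the demo values shown above.

```python
import os

# Defaults copied from the demo configuration in this example.
DEFAULTS = {
    "username": "etldev",
    "password": "3tld3v",
    "uri": "tokern-demo-wikimedia",
    "port": "5432",
    "database": "wikimedia",
}


def load_db_config(env=os.environ, prefix="WIKIMEDIA_DB_"):
    """Return the connection settings, overridden by <prefix><KEY> variables when set.

    The variable names (e.g. WIKIMEDIA_DB_PASSWORD) are an assumption of this
    sketch, not something the demo or the library defines.
    """
    return {key: env.get(prefix + key.upper(), default) for key, default in DEFAULTS.items()}


wikimedia_db = load_db_config()
```

With no variables set, the result is identical to the demo dictionary, so the rest of the example runs unchanged.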

from data_lineage import Catalog

catalog = Catalog(docker_address)

# Register the wikimedia data warehouse with the data-lineage app.

source = catalog.add_source(name="wikimedia", source_type="postgresql", **wikimedia_db)

# Scan the wikimedia data warehouse and register all schemata, tables and columns.

catalog.scan_source(source)

# Read queries from a JSON file. This is for demo purposes only.

import json

with open("queries.json", "r") as file:
    queries = json.load(file)
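Each entry in `queries` is later unpacked into keyword arguments with `**query`, so the file needs to be a JSON array of objects whose keys match what the analyzer accepts. The sketch below checks that shape before anything is sent. The required keys `name` and `query` are an assumption about the demo file, and the sample entry (including its table names) is made up for illustration.

```python
import json

# Assumption: each entry carries a query name and its SQL text.
REQUIRED_KEYS = {"name", "query"}


def validate_queries(queries):
    """Raise ValueError if queries is not a list of dicts with the required keys."""
    if not isinstance(queries, list):
        raise ValueError("expected a JSON array of query objects")
    for index, entry in enumerate(queries):
        if not isinstance(entry, dict):
            raise ValueError(f"entry {index} is not a JSON object")
        missing = REQUIRED_KEYS - entry.keys()
        if missing:
            raise ValueError(f"entry {index} is missing keys: {sorted(missing)}")
    return queries


# Hypothetical sample with the assumed shape.
sample = json.loads(
    '[{"name": "copy_page", "query": "INSERT INTO page_copy SELECT * FROM page"}]'
)
validate_queries(sample)
```

Running the check right after `json.load` turns a malformed file into one clear error instead of a `TypeError` part-way through the analysis loop.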

from datetime import datetime

from data_lineage import Analyze

analyze = Analyze(docker_address)

# Send each query to the data-lineage app, which parses it and records lineage.
for query in queries:
    print(query)
    analyze.analyze(**query, source=source, start_time=datetime.now(), end_time=datetime.now())
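The loop above stops at the first query that raises. When scanning a real query history, it may be preferable to continue and report failures at the end. The sketch below shows that pattern with a stand-in analyzer so that it runs without the demo. `analyze_all` and `fake_analyze` are hypothetical names, and which exceptions the real client raises is not covered here.

```python
from datetime import datetime


def analyze_all(queries, analyze_fn, **common):
    """Call analyze_fn once per query and return (succeeded, failed) lists."""
    succeeded, failed = [], []
    for query in queries:
        now = datetime.now()
        try:
            analyze_fn(**query, start_time=now, end_time=now, **common)
            succeeded.append(query)
        except Exception as error:
            # Keep going so one bad query does not block the rest.
            failed.append((query, error))
    return succeeded, failed


def fake_analyze(name, query, start_time, end_time, **kwargs):
    """Stand-in for the real analyzer: rejects empty SQL text."""
    if not query.strip():
        raise ValueError(f"query {name!r} is empty")


ok, bad = analyze_all(
    [{"name": "a", "query": "SELECT 1"}, {"name": "b", "query": " "}],
    fake_analyze,
)
print(f"{len(ok)} analyzed, {len(bad)} failed")
```

With the objects from this example, the call would look like `analyze_all(queries, analyze.analyze, source=source)`.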

Visit Kedro UI